Ensure your AI models are safe, compliant, and explainable, from ad hoc experimentation through production. Control who can access data across your AI workflows.

When regulators or executives ask how a model reached its decision or what data was used, most teams can’t answer. Models trained on untracked data, evolving features, and unmonitored access put trust, compliance, and performance at risk.

“How did your model make this decision?” becomes an audit nightmare without explainable lineage.

Lacking visibility into who accessed which data for model training leads to compliance violations.

Upstream data changes silently reduce accuracy and cause unpredictable results.

Without traceable inputs, there’s no way to prove models avoid protected attributes or proxy bias.

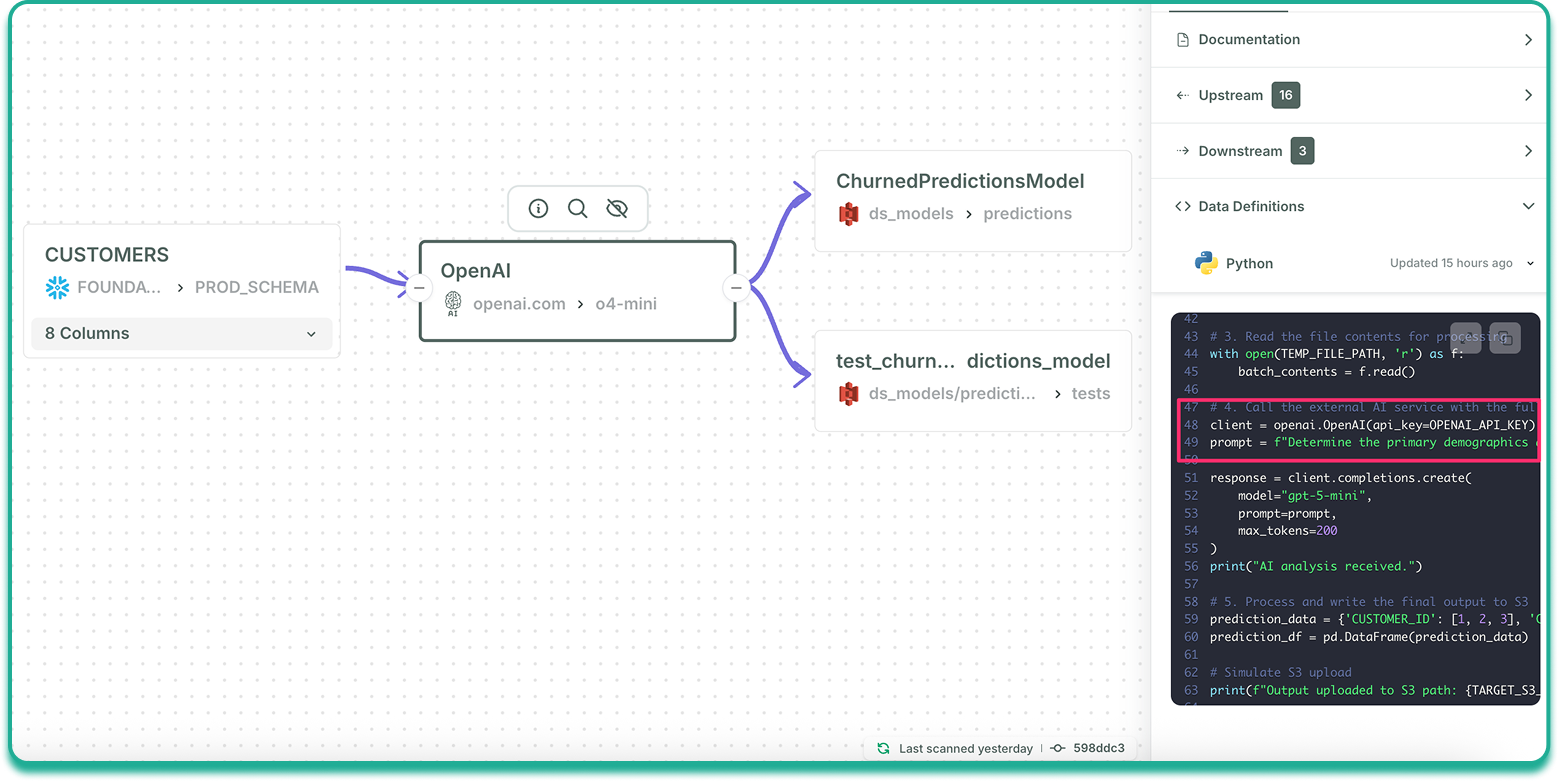

Foundational gives AI teams full traceability from source data to prediction. Every feature, transformation, and model decision is linked to its origin, with audit-ready access records and proactive alerts for changes that impact performance or compliance.

Understanding data flows is only useful when teams can act on that insight quickly.

Trace every feature back to its source data. Understand transformations, detect quality issues, and document provenance.

Track and control who accessed what data for AI development. Ensure compliant, auditable access patterns.

Receive alerts when upstream data changes affect features or model accuracy. Prevent degradation before deployment.

Document complete model lineage. Prove fair lending, FCRA, and EU AI Act compliance with one-click reports.

Experiment faster with trusted, governed data. Ensure high-quality, compliant inputs for every model.

Monitor and enforce who can access training data. Maintain a full audit trail across teams and projects.

Detect upstream changes that affect models before performance drops. Prevent degradation proactively.

Pass audits confidently with explainable, end-to-end model lineage and evidence of bias prevention.
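
For readers who want a concrete picture of what tracing a feature back to its source and detecting upstream impact can look like, here is a minimal Python sketch. It is not Foundational's API or implementation. The graph contents, the node names, and the `trace_sources` and `impacted_by` helpers are all hypothetical, chosen only to illustrate lineage as a graph walk.

```python
from collections import deque

# Hypothetical lineage graph: each node maps to the upstream nodes it is derived from.
# All names here are illustrative assumptions, not real datasets or product objects.
UPSTREAM = {
    "model:credit_risk_v2": ["feature:debt_to_income", "feature:account_age_days"],
    "feature:debt_to_income": ["table:loans", "table:income"],
    "feature:account_age_days": ["table:accounts"],
    "table:loans": [],
    "table:income": [],
    "table:accounts": [],
}


def trace_sources(node, upstream):
    """Return the root sources (nodes with no upstream) that feed `node`."""
    seen, sources = set(), set()
    queue = deque([node])
    while queue:
        current = queue.popleft()
        if current in seen:
            continue
        seen.add(current)
        parents = upstream.get(current, [])
        if not parents:
            sources.add(current)  # nothing upstream, so this is an origin
        queue.extend(parents)
    return sources


def impacted_by(changed, upstream):
    """Return every node downstream of `changed`, i.e. what a change could affect."""
    # Invert the upstream map so we can walk from a source toward models.
    downstream = {}
    for child, parents in upstream.items():
        for parent in parents:
            downstream.setdefault(parent, []).append(child)

    impacted, queue = set(), deque([changed])
    while queue:
        current = queue.popleft()
        for child in downstream.get(current, []):
            if child not in impacted:
                impacted.add(child)
                queue.append(child)
    return impacted


if __name__ == "__main__":
    print(sorted(trace_sources("model:credit_risk_v2", UPSTREAM)))
    print(sorted(impacted_by("table:income", UPSTREAM)))
```

In this sketch, walking upstream answers "what data was used?", and walking downstream answers "which features and models does this change touch?". A production system would also need to capture transformations, access records, and versioning, which this example leaves out.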