Trace every feature back to its source, validate access permissions, and ensure your models remain explainable, compliant, and fair.


Governance is embedded in the model lifecycle so teams maintain visibility, control, and compliance without slowing experimentation.

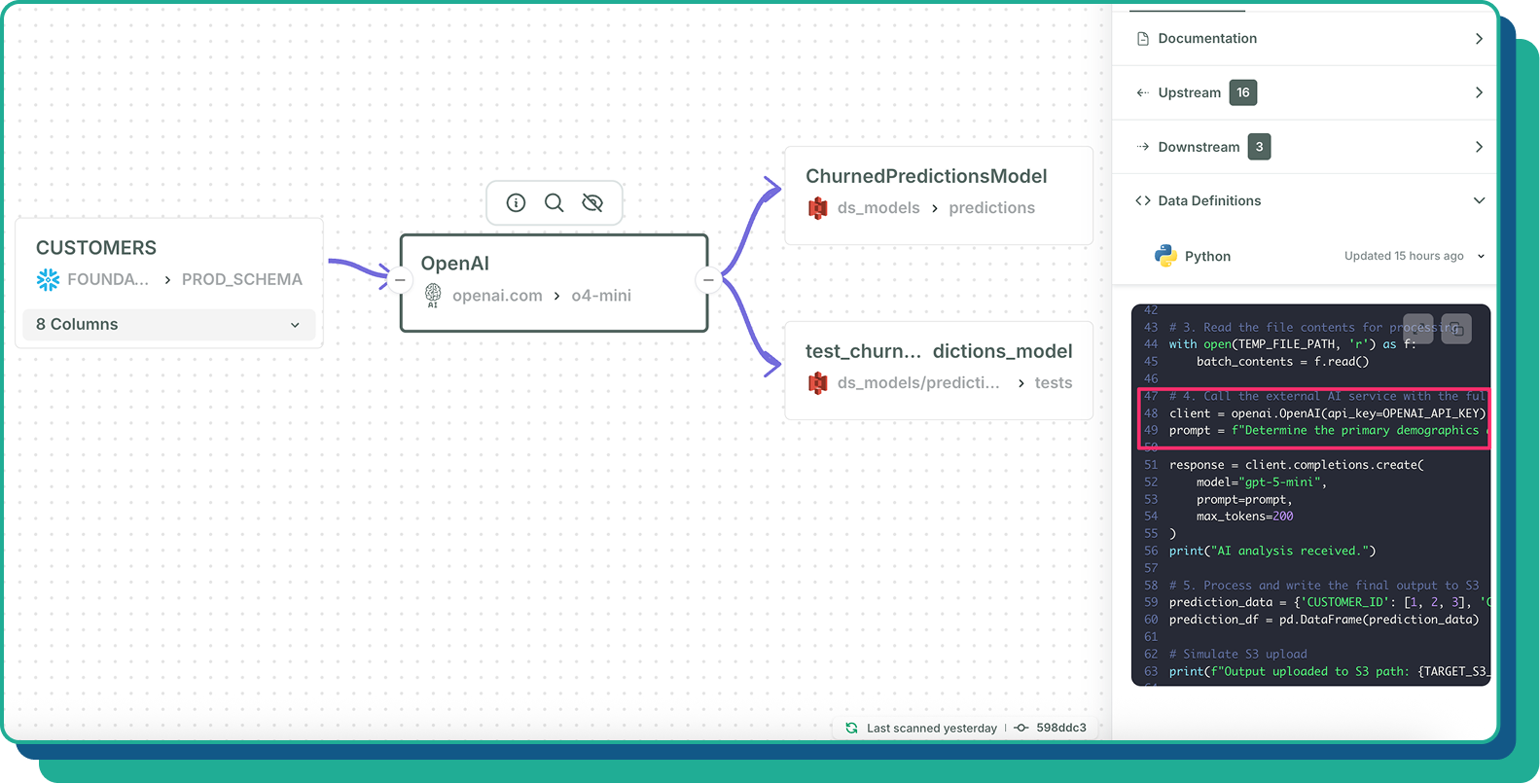

Full traceability and real-time checks expose hidden AI activity and ensure every model uses only trusted, compliant data.

Trace every feature back to the tables, transforms, and logic that created it.

Track who accessed which data for model development to support internal and regulatory controls.

Detect upstream shifts before they degrade AI accuracy or reliability.

Generate audit-ready documentation with complete feature provenance and interpretation details.

AI governance gives enterprises visibility, control, and guardrails so every AI project uses the right data, stays within its domain, and avoids privacy, safety, and reliability risks.

Get a complete view of which agents and models exist, what data they access, and how they use it so nothing runs out of sight or outside its intended domain.

Enforce boundaries so every AI system only reaches approved datasets. Prevent accidental exfiltration, cross-domain access, and unsafe connections that put sensitive data at risk.
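As a rough illustration of the boundary idea, the check below compares the datasets an AI system requests against an approved list and reports anything outside it. This is a minimal sketch, not the product's implementation: the `APPROVED` mapping, the dataset names, and the `unapproved_access` helper are all hypothetical.

```python
# Hypothetical policy: each AI system maps to the datasets it is approved to read.
APPROVED = {
    "churn_model": {"analytics.orders", "analytics.customers"},
    "support_agent": {"support.tickets"},
}


def unapproved_access(system, requested, approved):
    """Return the requested datasets that fall outside the system's approved set.

    A system with no policy entry is treated as having no approved datasets,
    so every request from it is reported.
    """
    allowed = approved.get(system, set())
    return sorted(set(requested) - allowed)


violations = unapproved_access(
    "churn_model", ["analytics.orders", "hr.salaries"], APPROVED
)
print(violations)
```

Treating an unknown system as having no approvals is a deliberate deny-by-default choice in this sketch; a real policy engine may handle that case differently.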

Replace manual request-and-approve workflows with real-time oversight that verifies AI systems stay within the data use they were approved for and alerts you when their behavior drifts.

Detect and prevent flows that could expose confidential records, violate privacy commitments, or send sensitive attributes into models or agents that should never receive them.

Establish the visibility and controls needed to keep AI reliable, compliant, and aligned with business intent.

Foundational is a new way of building and managing data: We make it easy for everyone in the organization to understand, communicate, and create code for data.

AI governance works by controlling how data, models, and access policies interact across the AI lifecycle. A critical foundation of AI governance is data lineage, which makes it possible to understand which data sources feed models, how changes propagate, and whether models rely on approved and trusted data.

AI governance ensures that every feature, transformation, and training input is traceable, permissioned, and compliant. It connects feature lineage, data access oversight, protected attribute detection, and regulatory reporting into one unified workflow.

The engine parses ML pipelines and feature engineering logic to build a complete lineage map. Every feature can be traced to its raw source tables, intermediate transformations, notebooks, and training steps. This supports debugging, compliance, and model explainability.
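To make the tracing step concrete, here is a minimal sketch of walking a lineage map from a feature back to its raw sources. It assumes the lineage has already been extracted into a simple dictionary; the `LINEAGE` structure, the node names, and the `upstream_sources` function are hypothetical and do not reflect the engine's internal format.

```python
from collections import deque

# Hypothetical lineage map: each node lists the upstream nodes it is derived from.
LINEAGE = {
    "feature.avg_order_value_30d": ["transform.orders_agg"],
    "transform.orders_agg": ["table.raw.orders", "table.raw.customers"],
    "feature.days_since_signup": ["table.raw.customers"],
}


def upstream_sources(node, lineage):
    """Breadth-first walk upstream; return the nodes that have no parents."""
    seen = {node}
    queue = deque([node])
    sources = set()
    while queue:
        current = queue.popleft()
        parents = lineage.get(current, [])
        if not parents:
            # No recorded upstream: treat this node as a raw source.
            sources.add(current)
        for parent in parents:
            if parent not in seen:
                seen.add(parent)
                queue.append(parent)
    return sources


print(sorted(upstream_sources("feature.avg_order_value_30d", LINEAGE)))
```

The `seen` set keeps the walk from revisiting shared upstream nodes, which matters once many features depend on the same tables.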

Yes. It identifies protected fields and proxy attributes during feature extraction. This supports fairness assessments, regulatory reviews, and responsible AI practices.
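One way to picture this kind of detection without reading any row-level data is a name-based check over column metadata. The sketch below is an assumption for illustration only: the `PROTECTED` and `PROXIES` lists and the `flag_columns` helper are hypothetical, and the source does not describe the actual detection method.

```python
import re

# Hypothetical term lists; a real deployment would maintain its own.
PROTECTED = {"gender", "race", "ethnicity", "religion", "age", "disability"}
PROXIES = {"zip_code": "race", "postal_code": "race", "first_name": "gender"}


def flag_columns(columns):
    """Flag column names that look like protected fields or known proxies."""
    flags = {}
    for col in columns:
        # Split on non-alphanumerics so "page_views" does not match "age".
        tokens = [t for t in re.split(r"[^a-z0-9]+", col.lower()) if t]
        normalized = "_".join(tokens)
        hits = PROTECTED.intersection(tokens)
        if hits:
            flags[col] = ("protected", sorted(hits)[0])
            continue
        for proxy, attribute in PROXIES.items():
            if proxy in normalized:
                flags[col] = ("proxy", attribute)
                break
    return flags


print(flag_columns(["customer_age", "page_views", "billing_zip_code"]))
```

Name matching only catches columns whose names reveal their content, so a check like this would be a first pass rather than a complete fairness assessment.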

No. The system analyzes metadata, code, configurations, and access patterns. It does not scan or store raw datasets, which simplifies security reviews and reduces compliance complexity.